What are Word Embeddings?

Word embeddings are vector representation of words that can be used as input (features) to other downstream tasks and ML models. Here is an article that explains popular word embeddings in more detail.

They are used in many NLP applications such as sentiment analysis, document clustering, question answering, paraphrase detection and so on. Pretrained models for both these embeddings are readily available and they are easy to incorporate into python code. Word2vec and Glove are popular pre-trained embeddings.

An important property of these embeddings is that words similar in meaning are close to each other. Another useful property is that vector arithmetic with embeddings are consistent with the semantic relationship between words. For instance, one could use these embeddings for analogy completion tasks such as Tokyo for France : Paris :: Japan : ? where the capital of relationship is captured through such arithmetic.

What are examples of Bias in word embeddings?

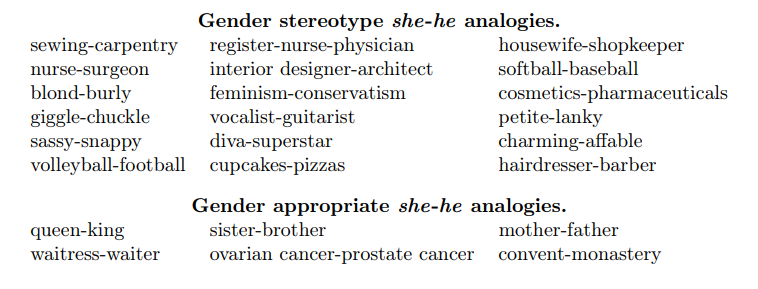

While word embeddings have sevaral useful properties, there are also some unintended biases in these embeddings. An example is gender bias. Some examples of gender bias include analogies such as man : computer programmer :: women : homemaker. Here are examples of other gender biased analogies that come up from word2vec embeddings from the google news dataset.This is also followed by gender appropriate analogies, for instance she : he :: sister : brother, since sister and brother have a gender (they are not gender neutral words).

How to detect Bias in word embeddings?

How to detect Bias in word embeddings?

There are two kinds of bias that the authors talk about. The direct bias comes up when some of the gender neutral words are closer to one gender specificic word than its counter part. For instance carpentry (a gender neutral word) is closer to he than she. An example of an Indirect bias is when words such as receptionist which are gender neutral are closer to softball than football (which are also gender neutral, but whose embeddings are biased since softball ends up being associated with females and football with males).

A way of debiasing word embeddings is to first find a gender subspace as advocated by this paper where the authors claim that the gender bias can be detected based on the direction of the vectors.

They first find a gender subspace and and are able to quantify how direct and indirect biases based on the component of each embedding that lies in the gender subspace. (The video explains this in a little more detail)

How to remove bias from word embeddings?

The following are two approaches to debias word embeddings proposed in this paper.

Neutralize: Remove the componant of the embedding along gender subspace to debias it.

Equalize: Identify a class of gender specific pair of words and ensure that every gender neutral word is equidistant from the gender specific word-pairs. Example babysitter should be equidistant from grandfather as grandmother and gal as guy.

Note: Code for the paper is available at https://github.com/tolga-b/debiaswe

References:

Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings, Advances in Neural Information Processing Systems 29 (NIPS 2016)

Authors: Tolga Bolukbasi, Kai-Wei Chang, James Y. Zou, Venkatesh Saligrama, Adam T. Kalai