MachineLearningInterview.com is a one-stop platform to prepare for Data Science interviews.

We want to help you crack interviews by doing the BEST you can do.

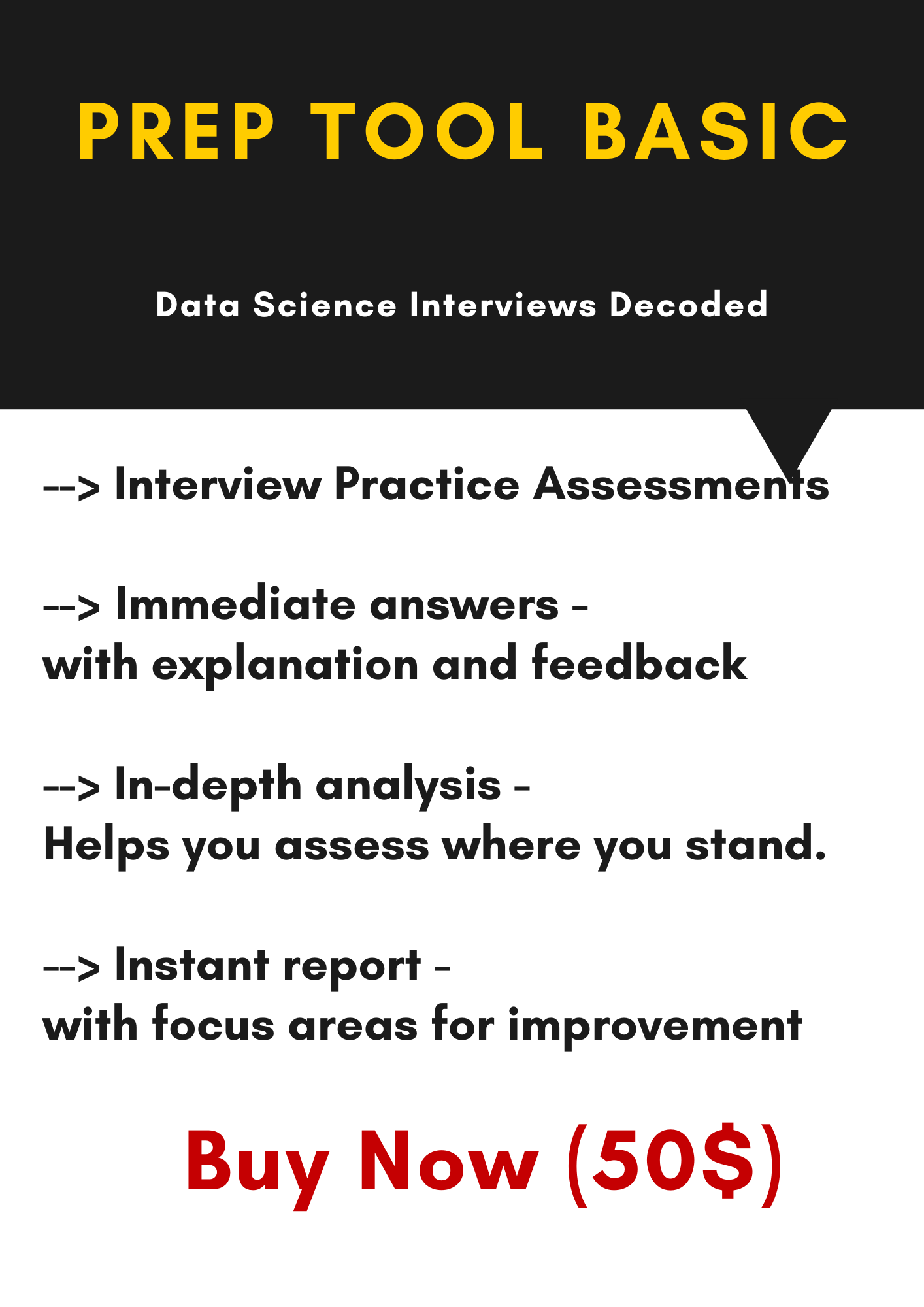

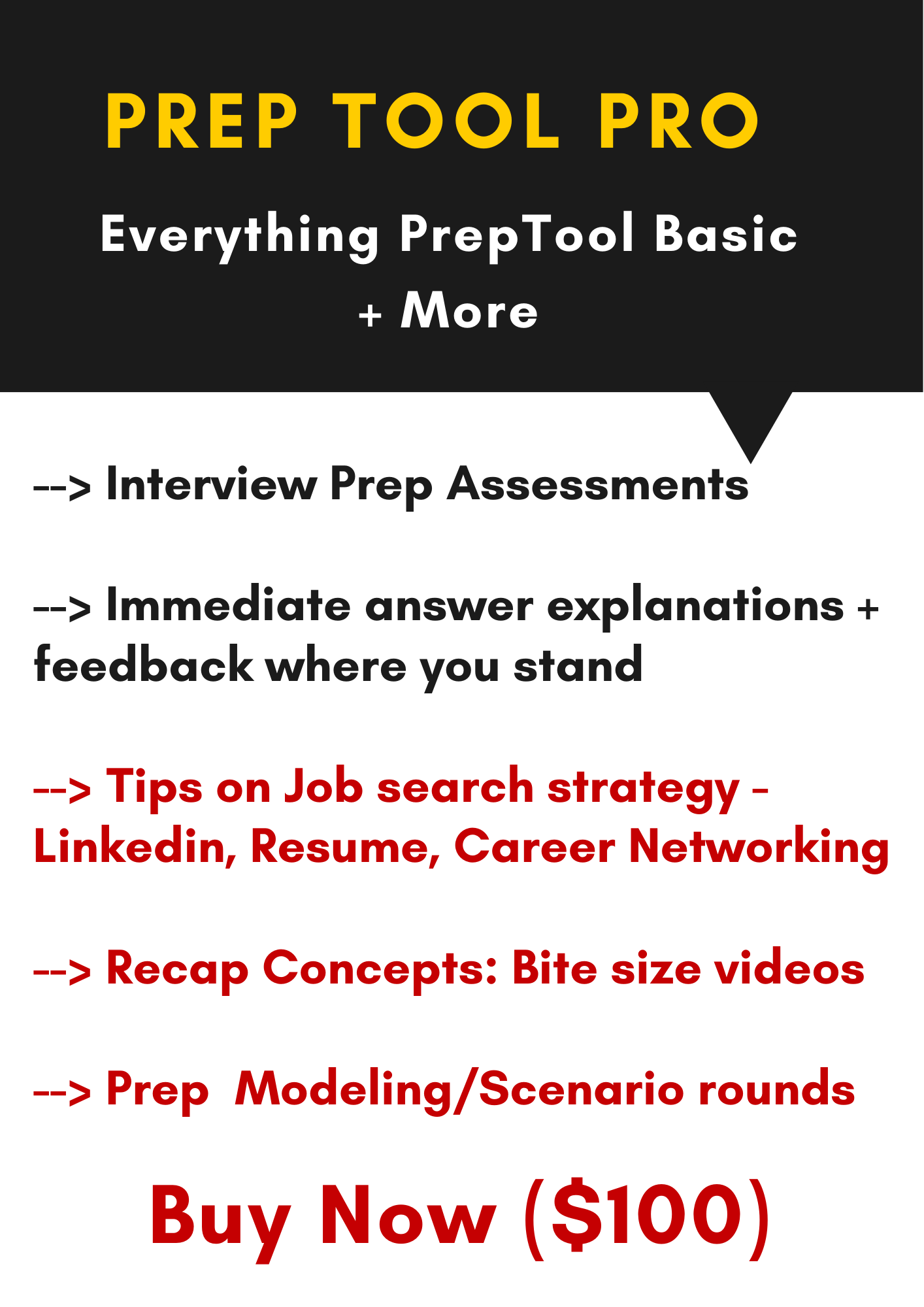

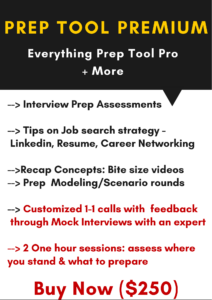

How do we help you crack your interviews? We help you …

- Revise key ML concepts

- Practice talking about technical concepts

- Crack scenario based modeling interviews

- Do 1-1 Mock interviews with experienced interviewers

- Get more interviews through networking and position yourself better

Who has designed these tools and content?

Lavanya holds a PhD in Machine Learning and a master's in Computer Graphics. She has worked for over 10 years with companies like Amazon, InMobi and Myntra. In addition, she has done collaborative projects with ML teams at companies like Xerox Research, NetApp and IBM. During her career she has interviewed over 100 candidates. She has also conducted several mock interviews for job aspirants and is building tools to address the common challenges candidates face during job interviews.

In the meantime, here are some free interview questions and answers! (Many more interview questions and answers are available in the Question Bank in our menu.) You can access more free content by subscribing to our mailing list.

When are deep learning algorithms more appropriate compared to traditional machine learning algorithms?

- Deep learning algorithms are capable of learning arbitrarily complex non-linear functions by using a deep enough and a wide enough network with the appropriate non-linear activation function.

- Traditional ML algorithms often require feature engineering, that is, finding the subset of meaningful features to use. Deep learning algorithms often avoid the need for this step.

- Deep Learning algorithms do well when there is a lot of data to work with.

How do you design a system that reads a natural language question and retrieves the closest FAQ answer?

There are multiple approaches to FAQ-based question answering:

- Keyword-based search (information retrieval approach): Tag each question with keywords. Extract keywords from the query and retrieve all relevant question-answer pairs. This is easy to scale with an appropriate inverted index.

- Lexical matching approach: Measure the word-level overlap between the query and each question. This approach might be harder to scale for real-time matching, depending on the size of the question-answer dataset.

- Embedding-based approach: Embed the query and each FAQ question, then pick the FAQ question closest to the query by embedding distance.

  - A common technique is to average word-level embeddings such as word2vec or GloVe to get a sentence embedding.

  - Phrase-level or document-level embeddings can also be used.

- Intent-based retrieval: Understand the intent of the question and the attributes of that intent. This works well if there is a specific set of intents and the problem is to classify the query into one of them. Tag questions with appropriate intents and attributes to retrieve the appropriate answer.
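The embedding approach above can be sketched in a few lines. This is a minimal illustration, not a production design: the 3-dimensional `WORD_VECTORS` table, the `FAQ` entries and all function names are hypothetical stand-ins for real pretrained embeddings (such as word2vec or GloVe) and a real FAQ dataset.

```python
import math

# Hypothetical toy word vectors standing in for pretrained word2vec/GloVe embeddings.
WORD_VECTORS = {
    "reset":    [0.9, 0.1, 0.0],
    "change":   [0.8, 0.2, 0.1],
    "password": [0.7, 0.0, 0.3],
    "refund":   [0.0, 0.9, 0.1],
    "money":    [0.1, 0.8, 0.2],
    "back":     [0.1, 0.7, 0.1],
    "order":    [0.1, 0.3, 0.9],
    "track":    [0.0, 0.2, 0.9],
}

# Hypothetical FAQ: question -> answer.
FAQ = {
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
    "how do i get a refund": "Refunds can be requested from the Orders page.",
    "how do i track my order": "A tracking link is emailed after dispatch.",
}


def sentence_embedding(text):
    """Average the vectors of known words; words without a vector are skipped."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return None
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def closest_faq(query, faq):
    """Return the (question, answer) pair whose question is most similar to the query."""
    query_vec = sentence_embedding(query)
    if query_vec is None:
        return None
    best, best_score = None, -1.0
    for question, answer in faq.items():
        question_vec = sentence_embedding(question)
        if question_vec is None:
            continue
        score = cosine_similarity(query_vec, question_vec)
        if score > best_score:
            best, best_score = (question, answer), score
    return best


print(closest_faq("change password", FAQ))
```

Note that the query "change password" shares only one word with the matched FAQ question; the match comes from "change" and "reset" having nearby vectors, which is what distinguishes this approach from lexical matching. At scale, the linear scan over FAQ questions would typically be replaced by an approximate nearest-neighbour index.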

How can you increase the recall of search query results (on a search engine or e-commerce site) without changing the underlying algorithm?

Since we are not allowed to change the underlying algorithm, we can only play with the search query itself. Here are some ways we can modify the search query to get better recall:

- We want to modify the query so that the results are still relevant to the original query. If the query is “dark pants”, results containing “black pants” are still relevant, since black is dark. So we also need results for synonymous queries: “black pants”, “black trousers” and “dark trousers” are all synonymous with “dark pants”. One way of increasing recall without changing the algorithm is therefore to also search for synonymous queries, formed by replacing words with their synonyms.

- You could apply the same principle to the results of the original query. Instead of changing the query, get a first set of results from the original query, then retrieve further results that are synonymous with that first set.
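The first idea, synonym-based query expansion, might look like the sketch below. The `SYNONYMS` table, the toy `CATALOG` and the `exact_search` function are hypothetical; `exact_search` simply stands in for the existing search algorithm, which is called unchanged.

```python
from itertools import product

# Hypothetical synonym table; a real system might derive it from a thesaurus or query logs.
SYNONYMS = {
    "dark": ["black"],
    "pants": ["trousers"],
}


def expand_query(query):
    """Return the original query plus every variant formed by swapping in synonyms."""
    options = [[word] + SYNONYMS.get(word, []) for word in query.lower().split()]
    return [" ".join(words) for words in product(*options)]


def search_with_expansion(query, search_fn):
    """Run the unchanged search function on every variant and merge the results."""
    seen, results = set(), []
    for variant in expand_query(query):
        for item in search_fn(variant):
            if item not in seen:  # de-duplicate while keeping first-seen order
                seen.add(item)
                results.append(item)
    return results


# Toy stand-in for the existing search algorithm: exact title match only.
CATALOG = ["dark pants", "black trousers", "red shirt"]


def exact_search(query):
    return [title for title in CATALOG if title == query]


print(expand_query("dark pants"))
print(search_with_expansion("dark pants", exact_search))
```

The number of variants grows multiplicatively with the number of synonyms per word, so a real system would likely cap the expansion or rank variants before searching.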

What is negative sampling when training the skip-gram model?

Skip-gram recap: The model tries to represent each word in a large text as a lower-dimensional vector in a space of K dimensions, such that similar words are close to each other. This is achieved by training a feed-forward network to predict the context words given a specific word.

Why is it slow: In this architecture, a softmax is used to predict each context word. In practice, the softmax is very slow to compute, especially for a large vocabulary, because its normalization sums over every word in the vocabulary.

Resolution:

- The objective function is reformulated to treat the problem as a binary classification problem: pairs of a given word and a corresponding context word are positive examples, and pairs of a given word and a non-context word are negative examples.

- While the number of positive examples is limited, there are many negative examples. Hence a randomly sampled set of negative examples is taken for each word when constructing the objective function.

This algorithm/model is called Skip-Gram with Negative Sampling (SGNS).
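To make the reformulated objective concrete, here is a minimal sketch of the loss for a single (word, context word) pair together with its sampled negatives. The function names are illustrative, and drawing negatives uniformly at random is a simplifying assumption; the original word2vec implementation draws them from a smoothed unigram distribution instead.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sample_negatives(vocab, exclude, k, rng):
    """Draw k words uniformly at random, skipping the words in `exclude`.

    Uniform sampling is an assumption made here to keep the sketch short.
    """
    candidates = [w for w in vocab if w not in exclude]
    return [rng.choice(candidates) for _ in range(k)]


def sgns_loss(center_vec, context_vec, negative_vecs):
    """Loss for one positive pair plus its negatives.

    The positive pair should score high (label 1) and each negative pair
    should score low (label 0), so only k + 1 dot products are needed
    instead of a softmax over the whole vocabulary.
    """
    loss = -math.log(sigmoid(dot(center_vec, context_vec)))
    for negative_vec in negative_vecs:
        loss -= math.log(sigmoid(-dot(center_vec, negative_vec)))
    return loss


rng = random.Random(0)
vocab = ["king", "queen", "apple", "banana", "car"]
print(sample_negatives(vocab, exclude={"king", "queen"}, k=2, rng=rng))
print(sgns_loss([1.0, 0.0], [1.0, 0.0], [[-1.0, 0.0]]))
```

The loss is small when the word vector points in the same direction as its true context vector and away from the sampled negatives, and it grows when the situation is reversed.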

How will you build an auto suggestion feature for a messaging app or Google Search?

- An auto suggestion feature involves recommending the next word in a sentence or phrase. This is possible if we have built a language model on a large enough corpus of “relevant” data.

- There are two caveats here:

  - The corpus must be large because we need to cover almost every case. This is important for recall.

  - The data must be relevant to achieve higher precision. A language model learnt on movie reviews, which are mostly written in natural, informal language, may not be useful for an application like Gmail, which may also contain formal mails.

- The data could come from Google search queries or a user’s own chats. The language model could be built using probabilistic language modeling or neural language modeling.
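As a rough illustration of the probabilistic option, the sketch below builds a bigram model that suggests the next word from counts of which word followed which. The tiny `corpus` and the function names are hypothetical; a real system would train on a much larger, relevant corpus and likely use longer contexts or a neural model.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows each other word in the corpus."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for previous, current in zip(words, words[1:]):
            following[previous][current] += 1
    return following


def suggest_next(model, prefix, k=3):
    """Suggest up to k next words with their estimated P(next | last word of prefix)."""
    words = prefix.lower().split()
    if not words or words[-1] not in model:
        return []  # nothing typed yet, or the last word was never seen in training
    counts = model[words[-1]]
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common(k)]


# Hypothetical chat-style training data.
corpus = [
    "see you tomorrow",
    "see you soon",
    "see you tomorrow morning",
    "thank you very much",
]
model = train_bigram_model(corpus)
print(suggest_next(model, "see you"))
```

The empty result for unseen words shows the recall caveat directly: the model can only suggest continuations for contexts that appeared in its training data.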

What are the different ways of preventing over-fitting in a deep neural network? Explain the intuition behind each.

- L2 norm regularization: Penalize large weights, pushing them closer to zero, which limits the effective complexity of the model and helps prevent overfitting.

- L1 norm regularization: Push the weights closer to zero and also induce sparsity in the weights. This is a less common form of regularization.

- Dropout regularization: Drop some of the hidden units at random during training, so that the network does not overfit by becoming too reliant on any single neuron.

- Early stopping: Stop training before the weights are adjusted to overfit the training data, typically when performance on a validation set stops improving.
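Each of these techniques is small enough to sketch without a deep learning framework. The functions below are illustrative only: the names, the `patience` parameter and the choice of inverted dropout (rescaling the kept units) are assumptions, though they mirror common practice.

```python
import random


def l2_penalty(weights, lam):
    """L2 term added to the training loss: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)


def l1_penalty(weights, lam):
    """L1 term added to the training loss: lam * sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def dropout(activations, drop_prob, rng):
    """Inverted dropout for training time (requires drop_prob < 1).

    Each unit is zeroed with probability drop_prob; the kept units are
    scaled by 1 / (1 - drop_prob) so the expected activation is unchanged.
    """
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def early_stopping_epoch(val_losses, patience):
    """Return the epoch index at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best_loss, best_epoch = float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch
    return len(val_losses) - 1


print(l2_penalty([1.0, -2.0], lam=0.1))
print(dropout([1.0, 1.0, 1.0, 1.0], drop_prob=0.5, rng=random.Random(0)))
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.9, 1.1], patience=2))
```

In practice, early stopping is usually paired with restoring the weights from the best epoch, which this sketch leaves out.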

Mail us at hello@machinelearninginterview.com if you have any feedback or find any interview questions you’d like us to answer!