Bias in Machine Learning models could often lead to unexpected outcomes. In this brief video we will look at different ways we might end up building biased ML models, with particular emphasis on societal biases such as gender, race and age.

Why do we care about Societal Bias in ML Models?

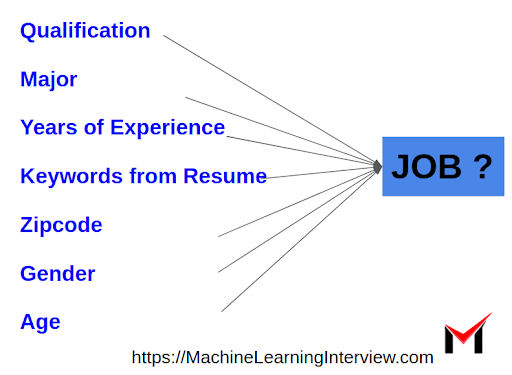

Consider an ML model being built for shortlisting job applications. Lets say there are many more male applications that got through compared to female.

If our prediction model uses sensitive attributes such as gender, race or age as features fed from the application form, it could end up learning a biased model where future female applicants are at a disadvantage. Clearly this is not the expected outcome.

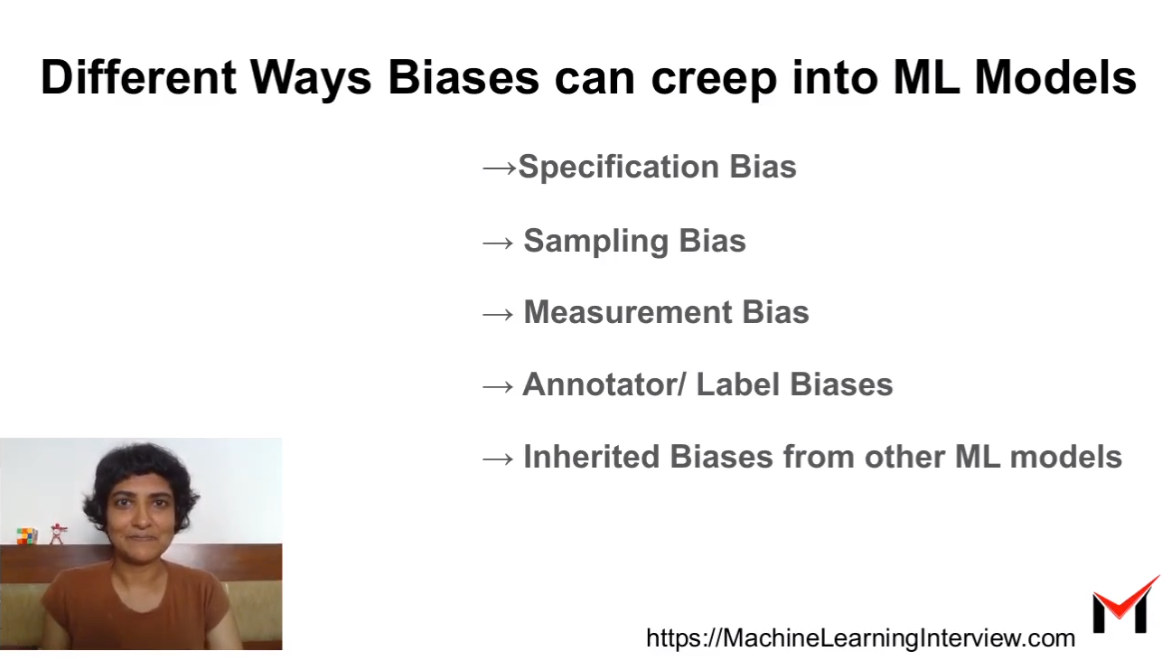

Types of Data biases

Types of Data biases

How do we avoid such socital biases from creeping into ML models? The first step is to understand how such biases are generated.

One of the ways we end up with a biased model is by feeding it with biased data. In this article we will focus on different ways in which we might end up with biased data.

Specification Bias:

Specification bias is the bias that arises during model design (Input and output). Some reasons why we might end up with specification bias are:

- Bad features: Using features that reflect bias

- Incorrect Discretization of Continuous Variables

An example of using unnecessary biased features is to use gender and race as features when making a model to shortlist job applications.

An important aspect to mention here is proxy features. Even if we avoid sensitive features that reflect bias, there could be other features that are highly correlated with the sensitive features.

Sampling Bias / Selection Bias:

This occurs when we do not adequately sampling from all subgroups. For instance, suppose there are more male resumes than female and the few female applications did not get through. we might end up learning to reject female applicants.

Similarly suppose there are very few resumes with major in “Information Systems”. Due to lack of representation of this major, a new applicant with major in information systems might end up seeing biased decision from the model.

An important aspect to note when talking about selection bias is Self-selection. Lets say we do an online survey on computer usage. We will end up getting very little representation from folks who do not use a computer in the first place.

Measurement Bias

Measurement bias occurs due to faulty inputs getting into our dataset. Some instances of measurement bias include:

- Faulty equipment measuring incorrect readings

- Error in noting down survey responses

Consider an example where we ask applicants to fill a form for Covid symptom triage. For a symptom like fever, suppose we have options to fill : High Fever, Low Fever, No fever, we might end up with bad data if the patient does not remember how high their fever was! We really need a “not sure” option…

Annotator Bias/ Label Bias

Human biases could creep into machine learning models from biased decisions in the real world that are used as labels. For instance, if there is a gender biased employer that shortlisted more males than females with similar qualifications, a model trained on the data would learn similar biases.

Similarly, if we use human annotators to create labels, biased views of the annotators could lead to biased label data.

Inherited Bias

Inputs to a Machine Learning model could come from the output of another ML model that is biased. This leads to biased inputs and finally biased outcome.

As an example, consider word embeddings. Biases in word embeddings creep into many NLP tasks. For instance, the popular word2vec embeddings have been shown to have biases that lead to analogies like the following: Man : Computer programmer :: woman : homemaker. To learn more about biases in word embeddings check out this article.

How to avoid Bias:

Knowing the existance of bias is the first step to try and eliminate the bias. It is important to be cognizant of the data biases and try to avoid them during data creation.