The joint probability distribution of the HMM is given by the following equation, where $x = (x_1, \dots, x_T)$ are the observed data points and $y = (y_1, \dots, y_T)$ the corresponding latent states, with $p(y_1 \mid y_0)$ read as the initial state distribution $p(y_1)$:

![Rendered by QuickLaTeX.com \[p(x, y)=p(x|y)p(y)\,=\,\prod_{t=1}^{T}p(x_{t}|y_{t})p(y_{t}|y_{t-1})\]](https://machinelearninginterview.com/wp-content/ql-cache/quicklatex.com-da9e1e96bca390bd552f19abd2ecce7a_l3.png)
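As a quick check of the factorization, here is a minimal Python sketch that evaluates $p(x, y)$ for one tagged sentence. The tag set, vocabulary, and all probabilities are made-up toy values, `start` plays the role of $p(y_1 \mid y_0)$, and the function name `joint_prob` is hypothetical.

```python
def joint_prob(words, tags, start, trans, emit):
    """p(x, y) = prod_t p(x_t | y_t) p(y_t | y_{t-1}), with p(y_1 | y_0) = start[y_1]."""
    p = start[tags[0]] * emit[tags[0]][words[0]]
    for t in range(1, len(words)):
        p *= trans[tags[t - 1]][tags[t]] * emit[tags[t]][words[t]]
    return p

# Toy parameters (hypothetical values, for illustration only).
start = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}
trans = {
    "DET": {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
emit = {
    "DET": {"the": 0.7, "a": 0.3},
    "NOUN": {"dog": 0.5, "cat": 0.5},
    "VERB": {"runs": 0.6, "sleeps": 0.4},
}

p = joint_prob(["the", "dog", "runs"], ["DET", "NOUN", "VERB"], start, trans, emit)
print(round(p, 5))  # 0.6*0.7 * 0.9*0.5 * 0.6*0.6 = 0.06804
```

For long sequences this product underflows, so in practice one sums log probabilities instead.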

Before answering how to train an HMM, it makes sense to ask the following questions:

- What problem are we training the above hidden Markov model for? Note that the model above is generic and can be applied to many different problems.

- Once we know the problem we are solving with this model, we need to know whether we have labelled data.

- If we have labelled data: although an HMM is a latent variable model, labelled data is available for some popular problems such as part-of-speech (POS) tagging. With labelled data (state labels given alongside the observed emissions), maximum likelihood estimation (MLE) of the transition and emission probabilities reduces to counting. In POS tagging, for instance, the transition probability from one tag to another is the number of times that transition occurs divided by the number of occurrences of the first tag, while the emission probability of a word given a tag is (number of times the word appears with that tag) / (number of words carrying that tag).

- If we don't have labelled data, the problem becomes unsupervised and we use the EM algorithm (for HMMs, known as the Baum-Welch algorithm) to estimate the transition and emission probabilities.

- For example, if the problem is to predict POS tags for a sequence of words and only unlabelled data is available, we apply EM as follows:

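- In the E step, we compute posterior probabilities over the hidden tags. To begin with, these can simply be assumed (for example, set randomly, which amounts to initializing $p(y_j \mid y_i)$ and $p(x_k \mid y_i)$); in later iterations they are computed from the current parameters with the forward-backward algorithm.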

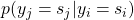

is estimated using maximum likelihood estimator and comes out to be

is estimated using maximum likelihood estimator and comes out to be

![Rendered by QuickLaTeX.com \[p(y_{j}|y_{i}) = \frac{number\,of\,occurrences\,of\,(y_{i},y_{j})}{number\,of\,occurrences\,of\,y_{i}}\]](https://machinelearninginterview.com/wp-content/ql-cache/quicklatex.com-e7c8dcdb41598195b0406781ab771a6c_l3.png)

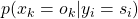

are also estimated using MLE and comes out as

are also estimated using MLE and comes out as

Initialize![Rendered by QuickLaTeX.com \[p(x_{k}|y_{i}) = \frac{number\,of\,occurrences\,of\,tag\,y_{i}\,generating x_{k}}{number\,of\,occurrences\,of\,y_{i}}\]](https://machinelearninginterview.com/wp-content/ql-cache/quicklatex.com-75e30fbbd71959881e511f215a617dec_l3.png)

and

and

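- In the M step, the model parameters are updated by MLE, with each "number of occurrences" taken as the expected count under the E-step posteriors. Here $s_i, s_j$ denote hidden states (tags) and $o_k$ an observation (word):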

![Rendered by QuickLaTeX.com \[p(y_{j}=s_{j} | y_{i}=s_{i}) = \frac{number\,of\,occurrences\,of\,(s_{i},s_{j})}{number\,of\,occurrences\,of\,s_{i}}\]](https://machinelearninginterview.com/wp-content/ql-cache/quicklatex.com-768c46fee53d855638652bcd31dcb5b6_l3.png)

![Rendered by QuickLaTeX.com \[p(x_{k}=o_{k} | y_{i}=s_{i}) = \frac{number\,of\,occurrences\,of\,tag\,s_{i}\,generating\,x_{k}}{number\,of\,occurrences\,of\,s_{i}}\]](https://machinelearninginterview.com/wp-content/ql-cache/quicklatex.com-8c34f92086cf46bb1b0495d40c2cf96c_l3.png)
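With labelled data, the two ratios above can be computed from plain counts; in EM the same ratios are evaluated with expected counts instead. A minimal Python sketch of the counting (supervised MLE) case, assuming each sentence is a list of `(word, tag)` pairs; `mle_estimates` and the toy corpus are hypothetical. One detail: the transition ratio here divides by the number of times a tag is the source of a transition (that is, not sentence-final), so each row of transition probabilities sums to one.

```python
from collections import Counter

def mle_estimates(tagged_sentences):
    """Supervised MLE for an HMM tagger by counting over (word, tag) sentences."""
    trans = Counter()       # (prev_tag, tag) -> number of transitions
    emit = Counter()        # (tag, word) -> number of emissions
    tag_total = Counter()   # tag -> number of occurrences
    from_total = Counter()  # tag -> number of times it starts a transition
    for sent in tagged_sentences:
        for t, (word, tag) in enumerate(sent):
            emit[(tag, word)] += 1
            tag_total[tag] += 1
            if t > 0:
                prev = sent[t - 1][1]
                trans[(prev, tag)] += 1
                from_total[prev] += 1
    p_trans = {(a, b): n / from_total[a] for (a, b), n in trans.items()}
    p_emit = {(tag, w): n / tag_total[tag] for (tag, w), n in emit.items()}
    return p_trans, p_emit

# Toy labelled corpus (hypothetical).
corpus = [
    [("the", "DET"), ("dog", "NOUN"), ("runs", "VERB")],
    [("the", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("a", "DET"), ("dog", "NOUN"), ("sleeps", "VERB")],
]
p_trans, p_emit = mle_estimates(corpus)
print(p_trans[("DET", "NOUN")])  # 1.0
print(p_emit[("DET", "the")])    # 2/3
```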

- When we have some labelled data, we can get an initial estimate using MLE on the labelled data (the counting technique described above for the labelled case) and then refine it with EM by augmenting it with the unlabelled data.
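A minimal sketch of this semi-supervised recipe in Python with NumPy, assuming words and tags are already mapped to integer indices. Several choices are assumptions, not from the text above: add-one smoothing of the labelled counts (so no probability starts at zero), an explicit initial-state vector `pi` for $p(y_1 \mid y_0)$, and keeping the labelled counts in every M step (one reading of "augmenting"). The E step is the scaled forward-backward algorithm, i.e. Baum-Welch; all function names are hypothetical.

```python
import numpy as np

def labelled_counts(tagged, n_states, n_obs, smoothing=1.0):
    """Smoothed counts from labelled sequences of (observation, state) index pairs."""
    pi = np.full(n_states, smoothing)
    A = np.full((n_states, n_states), smoothing)
    B = np.full((n_states, n_obs), smoothing)
    for seq in tagged:
        pi[seq[0][1]] += 1
        for t, (o, s) in enumerate(seq):
            B[s, o] += 1
            if t > 0:
                A[seq[t - 1][1], s] += 1
    return pi, A, B

def normalize(pi, A, B):
    """Turn counts into probabilities (the counting ratios)."""
    return pi / pi.sum(), A / A.sum(axis=1, keepdims=True), B / B.sum(axis=1, keepdims=True)

def forward_backward(obs, pi, A, B):
    """E step for one sequence with scaling. Returns gamma[t, i] = p(y_t=i | x),
    xi[t, i, j] = p(y_t=i, y_{t+1}=j | x), and log p(x)."""
    T, N = len(obs), len(pi)
    alpha, beta, c = np.zeros((T, N)), np.ones((T, N)), np.zeros(T)
    alpha[0] = pi * B[:, obs[0]]
    c[0] = alpha[0].sum()
    alpha[0] /= c[0]
    for t in range(1, T):
        alpha[t] = (alpha[t - 1] @ A) * B[:, obs[t]]
        c[t] = alpha[t].sum()
        alpha[t] /= c[t]
    for t in range(T - 2, -1, -1):
        beta[t] = A @ (B[:, obs[t + 1]] * beta[t + 1]) / c[t + 1]
    gamma = alpha * beta
    xi = np.zeros((T - 1, N, N))
    for t in range(T - 1):
        xi[t] = alpha[t][:, None] * A * (B[:, obs[t + 1]] * beta[t + 1])[None, :] / c[t + 1]
    return gamma, xi, np.log(c).sum()

def semi_supervised_em(labelled, unlabelled, n_states, n_obs, n_iter=10):
    """Initialize from labelled counts, then refine with EM on unlabelled sequences."""
    base = labelled_counts(labelled, n_states, n_obs)
    pi, A, B = normalize(*base)
    history = []
    for _ in range(n_iter):
        # Labelled counts stay fixed; expected counts from unlabelled data are added.
        pi_c, A_c, B_c = (x.copy() for x in base)
        total_ll = 0.0
        for obs in unlabelled:
            gamma, xi, ll = forward_backward(obs, pi, A, B)  # E step
            total_ll += ll
            pi_c += gamma[0]
            A_c += xi.sum(axis=0)
            for t, o in enumerate(obs):
                B_c[:, o] += gamma[t]
        history.append(total_ll)
        pi, A, B = normalize(pi_c, A_c, B_c)  # M step
    return pi, A, B, history

# Toy data (hypothetical): 2 tags, 3 word types.
labelled = [[(0, 0), (1, 1), (2, 1)], [(0, 0), (2, 1)]]
unlabelled = [[0, 1, 2], [0, 0, 2, 1], [1, 2]]
pi, A, B, history = semi_supervised_em(labelled, unlabelled, n_states=2, n_obs=3)
print(A.round(3))
```

Dropping `labelled` (passing an empty list, so only the smoothing counts remain in `base`) turns this into plain unsupervised Baum-Welch, although a symmetric start like that gives EM nothing to separate the states with; a random initialization is the usual remedy.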