Recap: Naive Bayes Classifier

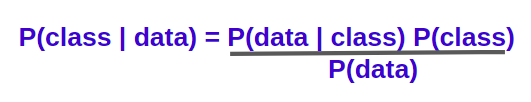

Naive Bayes Classifier is a popular model for classification based on the Bayes Rule.

Note that the classifier is called Naive – since it makes a simplistic assumption that the features are conditionally independant given the class label. In other words:

Naive Assumption:

P(datapoint | class) = P(feature_1 | class) * … * P(feature_n | class)

This assumption does not hold in a lot of usecases.

The probabilities used in the naive Bayes classifier are typically computed using the maximum likelihood estimate.

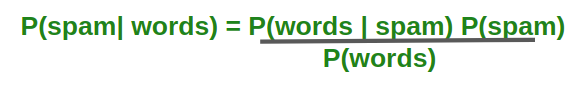

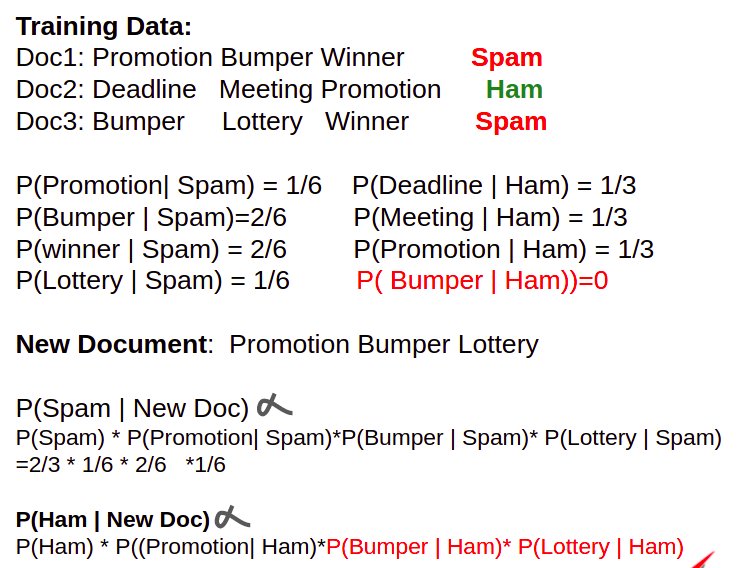

Lets take the example of spam detection.

Advantages of Using Naive Bayes Classifier

- Simple to Implement. The conditional probabilities are easy to evaluate.

- Very fast – no iterations since the probabilities can be directly computed. So this technique is useful where speed of training is important.

- If the conditional Independence assumption holds, it could give great results.

Disadvantages of Using Naive Bayes Classifier

- Conditional Independence Assumption does not always hold. In most situations, the feature show some form of dependency.

- Zero probability problem : When we encounter words in the test data for a particular class that are not present in the training data, we might end up with zero class probabilities. See the example below for more details: P(bumper | Ham) is 0 since bumper does not occuer in any ham (non-spam) documents in the training data.

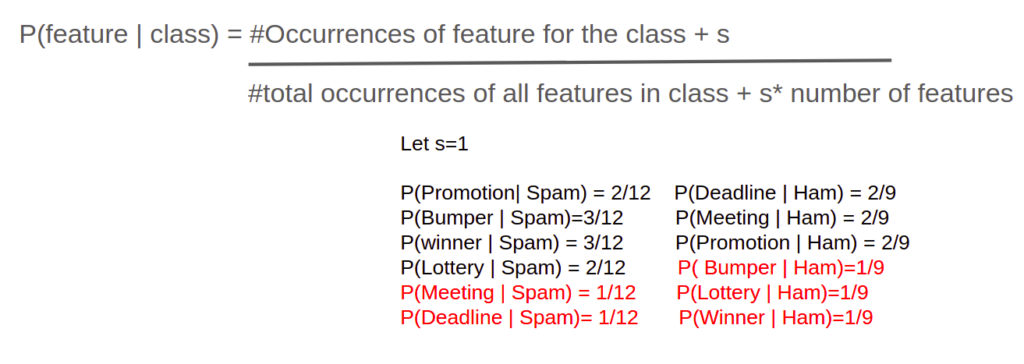

The zero probability problem can be Remedied through smoothing where we add a small smoothing factor to the numerator and denominator of every probability to avoid zero even for new words. See the example below to understand smoothing.

- Bad binning of continuous variables with Multinomial naive bayes: Gaussian Naive Bayes

- Not great for imbalanced data: Complement Naive Bayes

Take a look at the wikipedia article on naive Bayes classifier to learn more.